PLANET: Dynamic Content Planning in Autoregressive Transformers for Long-form Text Generation

Zhe Hu, Hou Pong Chan, Jiachen Liu, Xinyan Xiao, Hua Wu, Lifu Huang (ACL 2022)

Abstract:

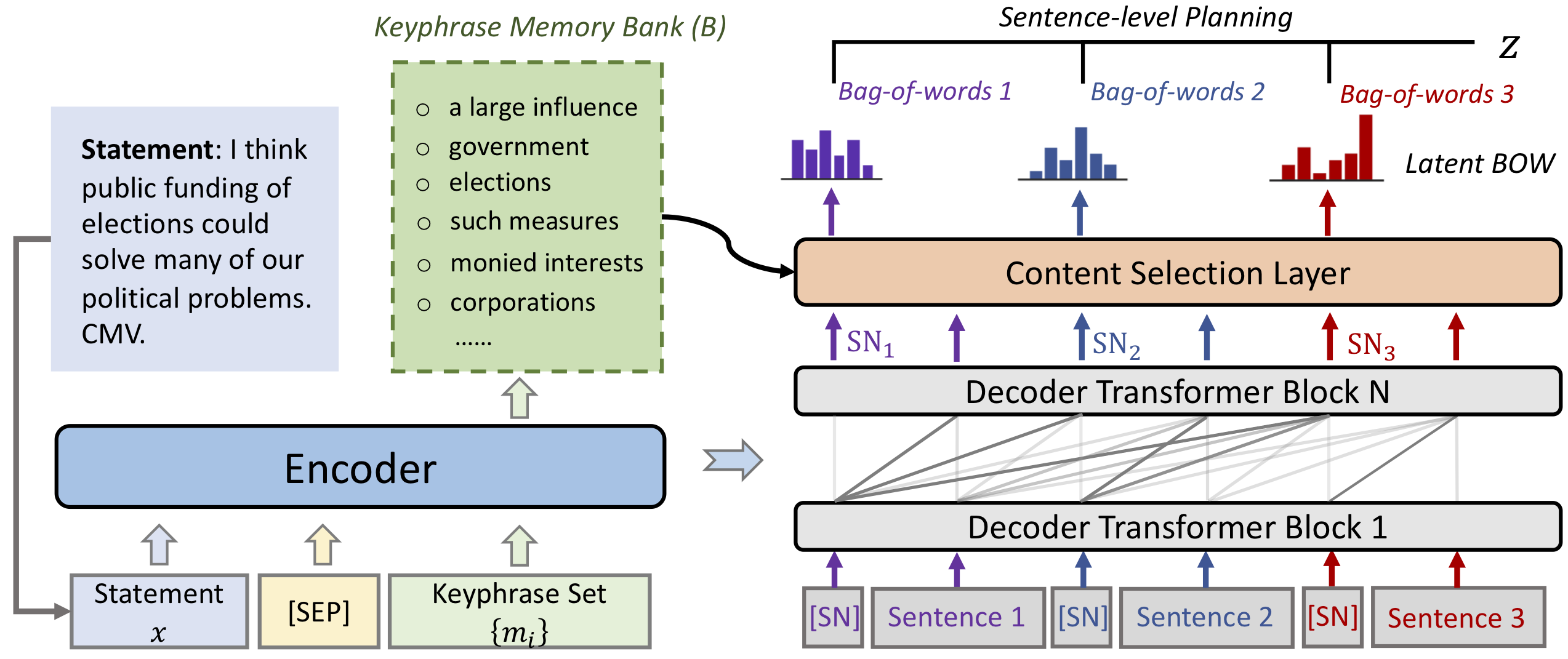

Despite recent progress by pre-trained language models in generating fluent text, existing models still suffer from incoherence in long-form text generation tasks that require proper content control and planning to form a coherent high-level logical flow. In this work, we propose PLANET, a novel generation framework that leverages the autoregressive self-attention mechanism to conduct content planning and surface realization dynamically. To guide the generation of output sentences, our framework enriches the Transformer decoder with latent representations that maintain sentence-level semantic plans grounded by bag-of-words. Moreover, we introduce a new coherence-based contrastive learning objective to further improve the coherence of the output. Extensive experiments are conducted on two challenging opinion generation tasks: counter-argument generation and opinion article generation. Both automatic and human evaluations show that our method significantly outperforms strong baselines and generates more coherent text with richer content.
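The two training signals named in the abstract can be illustrated with a small sketch. This is not the released implementation: the function names (`bow_loss`, `coherence_margin_loss`), the linear vocabulary projection, the hinge form of the contrastive term, and the `margin` value are all assumptions chosen for illustration. The first function scores how well a latent sentence-plan vector predicts the unordered set of words in its target sentence. The second penalizes incoherent (negative) targets that score too close to the coherent (positive) one.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def bow_loss(plan_vec, vocab_proj, target_word_ids):
    """Bag-of-words grounding loss (illustrative assumption).

    plan_vec: latent sentence-plan vector (list of floats).
    vocab_proj: hypothetical projection matrix, one row per vocabulary word.
    target_word_ids: ids of the words in the target sentence; order is ignored.
    Returns the negative log-likelihood of the distinct target words.
    """
    logits = [sum(w * h for w, h in zip(row, plan_vec)) for row in vocab_proj]
    log_probs = log_softmax(logits)
    return -sum(log_probs[i] for i in set(target_word_ids))


def coherence_margin_loss(pos_score, neg_scores, margin=0.5):
    """Hinge-style contrastive loss (illustrative assumption).

    pos_score: coherence score of the original, coherent target.
    neg_scores: scores of corrupted targets, e.g. with shuffled sentences.
    The loss is zero once every negative scores at least `margin` below
    the positive; otherwise it is the mean violation.
    """
    violations = [max(0.0, margin - pos_score + s) for s in neg_scores]
    return sum(violations) / len(violations)
```

In a full model the two terms would presumably be added to the usual token-level generation loss with tunable weights. Those weights, and the way negative samples are built, are left open here.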

Code and Data:

Stay tuned for more updates!